Knowledge Discovery in Databases creates the context for developing the tools needed to control the flood of data facing organizations that depend on ever-growing databases of business, manufacturing, scientific, and personal information.

As we march into the age of digital information, the problem of data overload looms ominously ahead. Our ability to analyze and understand massive datasets lags far behind our ability to gather and store the data. A new generation of computational techniques and tools is required to support the extraction of useful knowledge from the rapidly growing volumes of data. These techniques and tools are the subject of the emerging field of knowledge discovery in databases (KDD) and data mining.

Large databases of digital information are ubiquitous. Data from the neighborhood store’s checkout register, your bank’s credit card authorization device, records in your doctor’s office, patterns in your telephone calls, and many more applications generate streams of digital records archived in huge databases, sometimes in so-called data warehouses.

Current hardware and database technology allow efficient, inexpensive, and reliable data storage and access. However, whether the context is business, medicine, science, or government, the datasets themselves (in raw form) are of little direct value. What is of value is the knowledge that can be inferred from the data and put to use. For example, the marketing database of a consumer goods company may yield knowledge of correlations between sales of certain items and certain demographic groupings.

This knowledge can be used to introduce new targeted marketing campaigns with predictable financial return relative to unfocused campaigns. Databases are often a dormant potential resource that, tapped, can yield substantial benefits. This article gives an overview of the emerging field of KDD and data mining, including links with related fields and a definition of the knowledge discovery process.

Impractical Manual Data Analysis

The traditional method of turning data into knowledge relies on manual analysis and interpretation. For example, in the health-care industry, it is common for specialists to analyze current trends and changes in health-care data on a quarterly basis. The specialists then provide a report detailing the analysis to the sponsoring health-care organization; the report is then used as the basis for future decision making and planning for health-care management. In a totally different type of application, planetary geologists sift through remotely sensed images of planets and asteroids, carefully locating and cataloging geologic objects of interest, such as impact craters.

For these (and many other) applications, such manual probing of a dataset is slow, expensive, and highly subjective. In fact, such manual data analysis is becoming impractical in many domains as data volumes grow exponentially. Databases are increasing in size in two ways: the number N of records, or objects, in the database, and the number d of fields, or attributes, per object. Databases containing on the order of N = 10^9 objects are increasingly common in, for example, the astronomical sciences. The number d of fields can easily be on the order of 10^2 or even 10^3 in medical diagnostic applications. Who could be expected to digest billions of records, each with tens or hundreds of fields?

Yet the true value of such data lies in the users’ ability to extract useful reports, spot interesting events and trends, support decisions and policy based on statistical analysis and inference, and exploit the data to achieve business, operational, or scientific goals.

When the scale of data manipulation, exploration, and inference grows beyond human capacities, people look to computer technology to automate the bookkeeping. The problem of knowledge extraction from large databases involves many steps, ranging from data manipulation and retrieval to fundamental mathematical and statistical inference, search, and reasoning.

Researchers and practitioners interested in these problems have been meeting since the first KDD Workshop in 1989. Although the problem of extracting knowledge from data (or observations) is not new, automation in the context of large databases opens up many new unsolved problems.

Definitions

Finding useful patterns in data is known by different names in different communities, including data mining, knowledge extraction, information discovery, information harvesting, data archeology, and data pattern processing. The term “data mining” is used most by statisticians, database researchers, and more recently by the MIS and business communities. Here we use the term “KDD” to refer to the overall process of discovering useful knowledge from data. Data mining is a particular step in this process: the application of specific algorithms for extracting patterns (models) from data. The additional steps in the KDD process, such as data preparation, data selection, data cleaning, incorporation of appropriate prior knowledge, and proper interpretation of the results of mining, ensure that useful knowledge is derived from the data. Blind application of data mining methods (rightly criticized as data dredging in the statistical literature) can be a dangerous activity leading to discovery of meaningless patterns.

KDD has evolved, and continues to evolve, from the intersection of research in such fields as databases, machine learning, pattern recognition, statistics, artificial intelligence and reasoning with uncertainty, knowledge acquisition for expert systems, data visualization, machine discovery, scientific discovery, information retrieval, and high-performance computing. KDD software systems incorporate theories, algorithms, and methods from all of these fields.

Database theories and tools provide the necessary infrastructure to store, access, and manipulate data.

Data warehousing, a recently popularized term, refers to the current business trend of collecting and cleaning transactional data to make them available for online analysis and decision support. A popular approach for analysis of data warehouses is called online analytical processing (OLAP). OLAP tools focus on providing multidimensional data analysis, which is superior to SQL (a standard data manipulation language) in computing summaries and breakdowns along many dimensions. While current OLAP tools target interactive data analysis, we expect they will also include more automated discovery components in the near future.
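To make the idea of summaries and breakdowns along many dimensions concrete, here is a minimal Python sketch that totals one measure over every subset of a set of dimensions. The sales rows, the dimension names, and the `cube` function are all hypothetical illustrations; this is not how any particular OLAP product is implemented.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical transactional rows, as they might sit in a data warehouse.
SALES = [
    {"region": "North", "product": "tea", "quarter": "Q1", "amount": 120},
    {"region": "North", "product": "coffee", "quarter": "Q1", "amount": 80},
    {"region": "South", "product": "tea", "quarter": "Q1", "amount": 50},
    {"region": "South", "product": "tea", "quarter": "Q2", "amount": 70},
    {"region": "North", "product": "coffee", "quarter": "Q2", "amount": 90},
]
DIMS = ("region", "product", "quarter")


def cube(rows, dims, measure):
    """Total `measure` for every value combination of every subset of `dims`.

    Keys are tuples of (dimension, value) pairs; the empty tuple is the
    grand total.
    """
    totals = defaultdict(int)
    for size in range(len(dims) + 1):
        for group in combinations(dims, size):
            for row in rows:
                key = tuple((d, row[d]) for d in group)
                totals[key] += row[measure]
    return dict(totals)


summary = cube(SALES, DIMS, "amount")
print(summary[()])                                           # grand total
print(summary[(("region", "North"),)])                       # one dimension
print(summary[(("region", "North"), ("product", "tea"))])    # two dimensions
```

With three dimensions there are already eight groupings to compute. In plain SQL each grouping would typically be a separate GROUP BY query, which is the kind of work multidimensional tools aim to do in one pass.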

Fields concerned with inferring models from data, including statistical pattern recognition, applied statistics, machine learning, and neural networks, were the impetus for much early KDD work. KDD largely relies on methods from these fields to find patterns from data in the data mining step of the KDD process. A natural question is: How is KDD different from these other fields? KDD focuses on the overall process of knowledge discovery from data, including how the data is stored and accessed, how algorithms can be scaled to massive datasets and still run efficiently, how results can be interpreted and visualized, and how the overall human-machine interaction can be modeled and supported. KDD places a special emphasis on finding understandable patterns that can be interpreted as useful or interesting knowledge. Scaling and robustness properties of modeling algorithms for large noisy datasets are also of fundamental interest. Statistics has much in common with KDD.

Inference of knowledge from data has a fundamental statistical component. Statistics provides a language and framework for quantifying the uncertainty resulting when one tries to infer general patterns from a particular sample of an overall population. As mentioned earlier, the term data mining has had negative connotations in statistics since the 1960s, when computer-based data analysis techniques were first introduced. The concern arose over the fact that if one searches long enough in any dataset (even randomly generated data), one can find patterns that appear to be statistically significant but in fact are not. This issue is of fundamental importance to KDD. There has been substantial progress in understanding such issues in statistics in recent years, much directly relevant to KDD.

Thus, data mining is a legitimate activity as long as one understands how to do it correctly. KDD can also be viewed as encompassing a broader view of modeling than statistics, aiming to provide tools to automate (to the degree possible) the entire process of data analysis, including the statistician’s art of hypothesis selection.
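The concern about finding apparently significant patterns in random data can be reproduced with a short simulation. The following Python sketch generates fields of pure Gaussian noise, computes the correlation of every pair of fields, and counts how many exceed a conventional significance cutoff. The function names, the sizes, and the seed are assumptions chosen for illustration; the threshold of 0.197 is approximately the two-sided 5% critical value of Pearson's r for 100 records.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def count_spurious(n_records=100, n_fields=40, threshold=0.197, seed=0):
    """Count field pairs in pure noise whose |r| exceeds `threshold`.

    The default threshold is only appropriate for n_records=100
    (assumption: roughly the 5% two-sided critical value at that size).
    """
    rng = random.Random(seed)
    fields = [[rng.gauss(0, 1) for _ in range(n_records)]
              for _ in range(n_fields)]
    pairs = hits = 0
    for i in range(n_fields):
        for j in range(i + 1, n_fields):
            pairs += 1
            if abs(pearson(fields[i], fields[j])) > threshold:
                hits += 1
    return pairs, hits


pairs, hits = count_spurious()
print(f"{hits} of {pairs} field pairs look 'significant' in random data")
```

With 40 fields there are 780 pairs, so at a 5% cutoff one would expect a few dozen "discoveries" even though no real relationship exists. Corrections for multiple comparisons, or validation on data not used in the search, are the usual safeguards.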

The KDD Process

Here we present our (necessarily subjective) perspective of a unifying process-centric framework for KDD. The goal is to provide an overview of the variety of activities in this multidisciplinary field and how they fit together. We define the KDD process as:

The nontrivial process of identifying valid, novel, potentially useful, and ultimately understandable patterns in data.

Throughout this article, the term pattern goes beyond its traditional sense to include models or structure in data. In this definition, data comprises a set of facts (e.g., cases in a database), and pattern is an expression in some language describing a subset of the data (or a model applicable to that subset). The term process implies there are many steps involving data preparation, search for patterns, knowledge evaluation, and refinement, all repeated in multiple iterations. The process is assumed to be nontrivial in that it goes beyond computing closed-form quantities; that is, it must involve search for structure, models, patterns, or parameters. The discovered patterns should be valid for new data with some degree of certainty. We also want patterns to be novel (at least to the system and preferably to the user) and potentially useful for the user or task.

Finally, the patterns should be understandable—if not immediately, then after some postprocessing. This definition implies we can define quantitative measures for evaluating extracted patterns. In many cases, it is possible to define measures of certainty (e.g., estimated classification accuracy) or utility (e.g., gain, perhaps in dollars saved due to better predictions or speed-up in a system’s response time). Such notions as novelty and understandability are much more subjective. In certain contexts, understandability can be estimated through simplicity (e.g., number of bits needed to describe a pattern). An important notion, called interestingness, is usually taken as an overall measure of pattern value, combining validity, novelty, usefulness, and simplicity. Interestingness functions can be explicitly defined or can be manifested implicitly through an ordering placed by the KDD system on the discovered patterns or models.
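One way to picture an explicitly defined interestingness function is as a weighted combination of the four components named above. The Python sketch below is only an illustration: the `Pattern` fields, the weights, the 64-bit simplicity scale, and the example patterns are all assumptions, not values from this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pattern:
    description: str
    validity: float    # e.g., estimated accuracy on new data, in [0, 1]
    novelty: float     # subjective score in [0, 1]
    usefulness: float  # normalized utility (e.g., scaled savings), in [0, 1]
    bits: int          # description length, used as a proxy for simplicity


def simplicity(pattern, max_bits=64):
    """Map description length to [0, 1]; shorter descriptions score higher."""
    return max(0.0, 1.0 - pattern.bits / max_bits)


def interestingness(pattern, weights=(0.4, 0.2, 0.3, 0.1)):
    """Weighted sum of validity, novelty, usefulness, and simplicity.

    The weights are hypothetical; a real system would tune or elicit them.
    """
    w_valid, w_novel, w_useful, w_simple = weights
    return (w_valid * pattern.validity
            + w_novel * pattern.novelty
            + w_useful * pattern.usefulness
            + w_simple * simplicity(pattern))


patterns = [
    Pattern("known seasonal sales peak", 0.90, 0.10, 0.20, 8),
    Pattern("age group linked to product returns", 0.80, 0.70, 0.60, 32),
    Pattern("complex rule over twelve fields", 0.55, 0.90, 0.30, 60),
]

# The ordering itself is the implicit form of interestingness.
ranked = sorted(patterns, key=interestingness, reverse=True)
for p in ranked:
    print(f"{interestingness(p):.3f}  {p.description}")
```

A linear combination is the simplest choice. Nothing in the definition requires it, and the ordering produced by `sorted` shows how an interestingness measure can also be expressed implicitly as a ranking.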

Data mining is a step in the KDD process consisting of an enumeration of patterns (or models) over the data, subject to some acceptable computational-efficiency limitations. Since the patterns enumerable over any finite dataset are potentially infinite, and because the enumeration of patterns involves some form of search in a large space, computational constraints place severe limits on the subspace that can be explored by a data mining algorithm.
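The effect of computational constraints on the searchable pattern space can be seen even in a deliberately restricted pattern language. The Python sketch below treats a pattern as a set of items that co-occur in transactions, enumerates only itemsets up to a size limit, and keeps those meeting a minimum support. The transactions, the function names, and the 0.5 support cutoff are hypothetical.

```python
from itertools import combinations
from math import comb


def candidate_itemsets(items, max_size):
    """Yield every non-empty itemset with at most `max_size` items."""
    ordered = sorted(items)
    for k in range(1, max_size + 1):
        yield from combinations(ordered, k)


def support(itemset, transactions):
    """Fraction of transactions containing every item in `itemset`."""
    needed = set(itemset)
    return sum(needed <= t for t in transactions) / len(transactions)


def space_size(n_items, max_size):
    """Number of candidate itemsets of size 1..max_size over n_items items."""
    return sum(comb(n_items, k) for k in range(1, max_size + 1))


transactions = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"butter", "jam"},
    {"bread", "butter"},
]
items = set().union(*transactions)

frequent = {}
for itemset in candidate_itemsets(items, max_size=2):
    s = support(itemset, transactions)
    if s >= 0.5:
        frequent[itemset] = s

print(frequent)
print(space_size(100, 3))    # bounded search over 100 items
print(space_size(100, 100))  # unbounded: 2**100 - 1 candidates
```

Even this finite language grows as 2^d - 1 in the number of items d, so the size limit (or some other pruning rule) is what makes the enumeration feasible. Richer pattern languages, such as rules with real-valued thresholds, make the space larger still.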

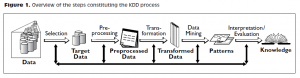

The KDD process is outlined in Figure 1. (We did not show all the possible arrows to indicate that loops can, and do, occur between any two steps in the process; also not shown is the system’s performance element, which uses knowledge to make decisions or take actions.) The KDD process is interactive and iterative (with many decisions made by the user), involving numerous steps, summarized as:

1. Learning the application domain: includes relevant prior knowledge and the goals of the application

2. Creating a target dataset: includes selecting a dataset or focusing on a subset of variables or data samples on which discovery is to be performed

3. Data cleaning and preprocessing: includes basic operations, such as removing noise or outliers if appropriate, collecting the necessary information to model or account for noise, deciding on strategies for handling missing data fields, and accounting for time sequence information and known changes, as well as deciding DBMS issues, such as data types, schema, and mapping of missing and unknown values

4. Data reduction and projection: includes finding useful features to represent the data, depending on the goal of the task, and using dimensionality reduction or transformation methods to reduce the effective number of variables under consideration or to find invariant representations for the data

5. Choosing the function of data mining: includes deciding the purpose of the model derived by the data mining algorithm (e.g., summarization, classification, regression, and clustering)

6. Choosing the data mining algorithm(s): includes selecting method(s) to be used for searching for patterns in the data, such as deciding which models and parameters may be appropriate (e.g., models for categorical data are different from models on vectors over reals) and matching a particular data mining method with the overall criteria of the KDD process (e.g., the user may be more interested in understanding the model than in its predictive capabilities)

7. Data mining: includes searching for patterns of interest in a particular representational form or a set of such representations, including classification rules or trees, regression, clustering, sequence modeling, dependency, and line analysis

8. Interpretation: includes interpreting the discovered patterns and possibly returning to any of the previous steps, as well as possible visualization of the extracted patterns, removing redundant or irrelevant patterns, and translating the useful ones into terms understandable by users

9. Using discovered knowledge: includes incorporating this knowledge into the performance system, taking actions based on the knowledge, or simply documenting it and reporting it to interested parties, as well as checking for and resolving potential conflicts with previously believed (or extracted) knowledge.
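The steps above can be sketched as a chain of small functions. The following Python example is a toy illustration under stated assumptions: the records, the field names, and the choice of a single-threshold classification rule as the mining method are all hypothetical, and steps 1 and 9 (domain understanding and use of the knowledge) are left to the user.

```python
from statistics import mean

# Hypothetical raw records; None marks a missing field.
RAW = [
    {"age": 25, "income": 30, "region": "N", "bought": 0},
    {"age": 47, "income": 82, "region": "S", "bought": 1},
    {"age": 52, "income": None, "region": "S", "bought": 1},
    {"age": 33, "income": 41, "region": "N", "bought": 0},
    {"age": 61, "income": 95, "region": "N", "bought": 1},
    {"age": 29, "income": 38, "region": "S", "bought": 0},
]


def select(records, fields):
    """Step 2: create a target dataset with only the chosen fields."""
    return [{f: r[f] for f in fields} for r in records]


def clean(records):
    """Step 3: one simple missing-data strategy, dropping incomplete records."""
    return [r for r in records if all(v is not None for v in r.values())]


def project(records, feature, target):
    """Step 4: reduce each record to a (feature, target) pair."""
    return [(r[feature], r[target]) for r in records]


def mine(pairs):
    """Steps 5-7: classification with a rule 'x >= t implies 1'.

    Searches every observed value as a threshold and returns the first
    (threshold, training accuracy) pair with the highest accuracy.
    """
    best = None
    for t in sorted({x for x, _ in pairs}):
        acc = mean(int((x >= t) == bool(y)) for x, y in pairs)
        if best is None or acc > best[1]:
            best = (t, acc)
    return best


def interpret(feature, rule):
    """Step 8: translate the mined rule into user-readable terms."""
    threshold, acc = rule
    return f"if {feature} >= {threshold} then bought (training accuracy {acc:.2f})"


def run_kdd(records, feature):
    target = select(records, [feature, "bought"])
    prepared = project(clean(target), feature, "bought")
    return interpret(feature, mine(prepared))


# The process is iterative: the user loops back and tries another feature.
for feature in ("age", "income"):
    print(run_kdd(RAW, feature))
```

The accuracy reported here is measured on the same records used for the search, so it says nothing about validity on new data. A fuller sketch would hold out records for evaluation before accepting a rule.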

Most previous work on KDD focused primarily on the data mining step. However, the other steps are equally if not more important for the successful application of KDD in practice. We now focus on the data mining component, which has received by far the most attention in the literature.

Source: COMMUNICATIONS OF THE ACM November 1996/Vol. 39, No. 11
